This blog post will be a discussion of various general approaches to scenery and the trade-offs we have to consider, e.g. plausibility and realism, procedural vs. algorithmic and data driven design. But first, a brief note on Outerra. As I have said before, we are already aware of Outerra, so there is no need to email us. The bottom line is that we have a set of mostly done features for X-Plane 10, our goal is to finish X-Plane 10, and we are not even spending one brain cell considering putting a new rendering engine into X-Plane while we are trying to get 10.0 done.

Defining Some Terms

One of the problems with comparing scenery system approaches is that a real productized approach to scenery rarely fits into a perfect bucket or matches a single theoretical techniques. So here are some approximate terms, designed to generally describe an approach. They're not going to be perfect fits, and even the definitions will fluctuate in different contexts and forums.

- We can say scenery is plausible when it looks like it might exist somewhere in the world. Plausible means that roads don't go straight up over a cliff, trees don't grow in the ocean, etc. In other words, plausible scenery is scenery where absurd things don't happen. Plausible scenery is great when you don't know what an area should look like. A lack of plausibility is often a bug.

- We can say scenery is realistic when it correlates closely with what is really present at a given location on the Earth. So if there really is a lake behind my house, realistic scenery has that lake. Plausible scenery might have a lake, a forest, or something else believable for where I live (the Northeastern United States). A giant sandy desert would not be plausible for my location.

- We can say scenery is procedural if the detail in the scenery comes from some kind of algorithm that produces results. For example, a fractal coastline is procedural.

- We can say scenery is data driven when the detail comes from some source of external input data. Our mountains are currently data driven - that is, the mountain shape basically comes directly from the DEMs we use.

- We can say scenery is artist driven if the look of the scenery comes from art assets created by an art team.

- We can say scenery is algorithm driven if part of its look comes from the transformational process that converts data from one form to another.

So Are We Plausible or Realistic?

So the first question is: is the goal of X-Plane global scenery plausibility or realism? The answer is: a bit of both. Austin's posts on the subject virtually always bring up plausibility. The reason for this is simple: he is not too worried about the amount of realism we've put into the scenery, but he is not happy with the bugs. He wants the bugs gone. So every time he and I speak, he says "and make sure it's plausible!"

But we're not going to remove realism just to fix plausibility bugs. I expect that the next global scenery render will be at least as realistic as the last - that is, we're going to use better data and we're not going to make up data where we had real information before.

There are limits to realism. We don't expect the global scenery to ever be as realistic as a custom scenery package for a small matter. But realism does matter. Part of the joy of flying in a flight simulator is seeing the real world. Where we can have more realistic global scenery, we consider it to be a win, and we are always looking to be more realistic than the last render.

Plausibility for the version 10 render is going to take two forms:

- Bug fixes. Any time something screwy happens, it's not plausible. Sometimes these are code bugs that must be fixed, and sometimes they are data conflicts. For example, the water data sasys "water" but the elevation data says "hill". Combine them and you get water going up a hill. We have to write code to resolve this, somehow.

- We are reworking the way cities are rendered, because even at their best, the old approach, procedural buildings with algorithmic roads over land class photos, did not look plausible, even at its very highest setting. So this is a feature request to fix a plausibility problem.

I've discussed this before (and forgotten about the post). But to expand the discussion, we need to consider not only algorithmic and procedural data processing, but whether we are driven by procedural generation, input data, assets created by artists, or some combination. (In practice, all systems require a mix of data, art assets, and procedures and algorithms, it's a question of the blend.)

I've been working on global scenery for a few years now, and over time I've come to appreciate the importance of artist input (via art assets) into any scenery process. Simply put, if you want scenery to look good, you need to make it reasonably straight forward for people who are good at making pretty pictures to control the look of your visual results. A few years ago I viewed the scenery process as strictly a question of data conversion and visualization, but now I see it as finding a way to merge art assets and data into a cogent final product, with the art assets being used in a way that the artists can control. In practice, this often means making sure that the art assets come in a format that artists are comfortable with or can learn without too much pain.

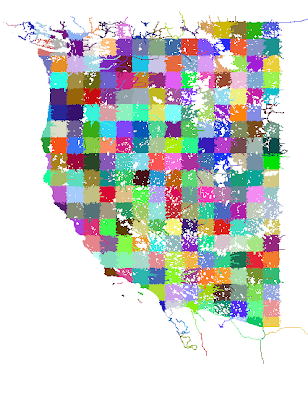

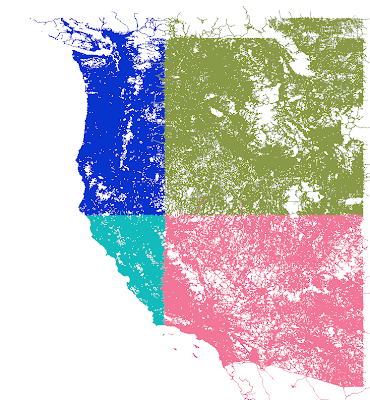

As I said in the previous post, our approach is becoming more algorithmic and less procedural as higher quality source data becomes available. (For example, we don't have to generate European roads when we can import and reprocess them.) But our approach over time has always been heavily artist driven. By this I mean: our input data is algorithmically processed into a final form that makes sense only in the context of art assets, and we have a pretty good idea of what those art assets will look like when we design the algorithms. To use roads as an example again, our task with OSM is to convert OSM road data into a road network that will visualize nicely with road art assets created by an artist.

Procedural Compression

One way to view procedural scenery is "creating lots of information from little or no information". But another way to think of it is as a compression technology. As was correctly pointed out on the org forums, you use less storage specifying the overall location of a forest than you do specifying every tree individually. The compressed form (store the forest location) can be equally plausible. It will be less realistic if the original tree locations were based on real world data, but it will be equally (unrealistic) if the original tree locations were procedurally generated. Put another way, pushing procedural processes out of the scenery generation process and into the flight simulator makes DSFs smaller.

When I first started working on X-Plane 8 DSF scenery, not only was DVD size a factor, but so was load time; we had one core and it wasn't a very fast core. Anything we could do to make loading faster, we did. Thus we pushed a lot of work into the scenery generation process, including procedural processes, to keep load time down.

Times have changed; we now have dual core machines as a baseline, and often quite a few more cores. Thus over time we are starting to move procedural processes back into the simulator, trading load time (which runs on multiple cores) for generation time and file size. So perhaps a more accurate statement would be: our scenery generation process is becoming more algorithmic and less procedural, and X-Plane itself is becoming more procedural. This is driven both by more input data (which must be processed up front) and more compute power on the host (which lets us shrink file size, and thus use DVD space for other things).

X-Plane 10

Here's how this plays out in practice in version 10:

- Some (but not all) of the building placement work* has been moved into X-Plane; a bit of expensive precomputation is still done at DSF generation time.

- Some (but not all) of road processing has been moved into X-Plane; a lot is still done at DSF generation time.

- Where possible, we are moving from a multi-layered approach to terrain to a pixel-shader-based approach to terrain. This cuts down overdraw and uses the GPU more efficiently. (The simplest example: in X-Plane 8 and 9, cliffs have separate terrains from hills. In X-Plane 10, a single terrain sits on both the cliff and the hill and changes its appearance based on the actual slope; this texture change is computed by the GPU.)